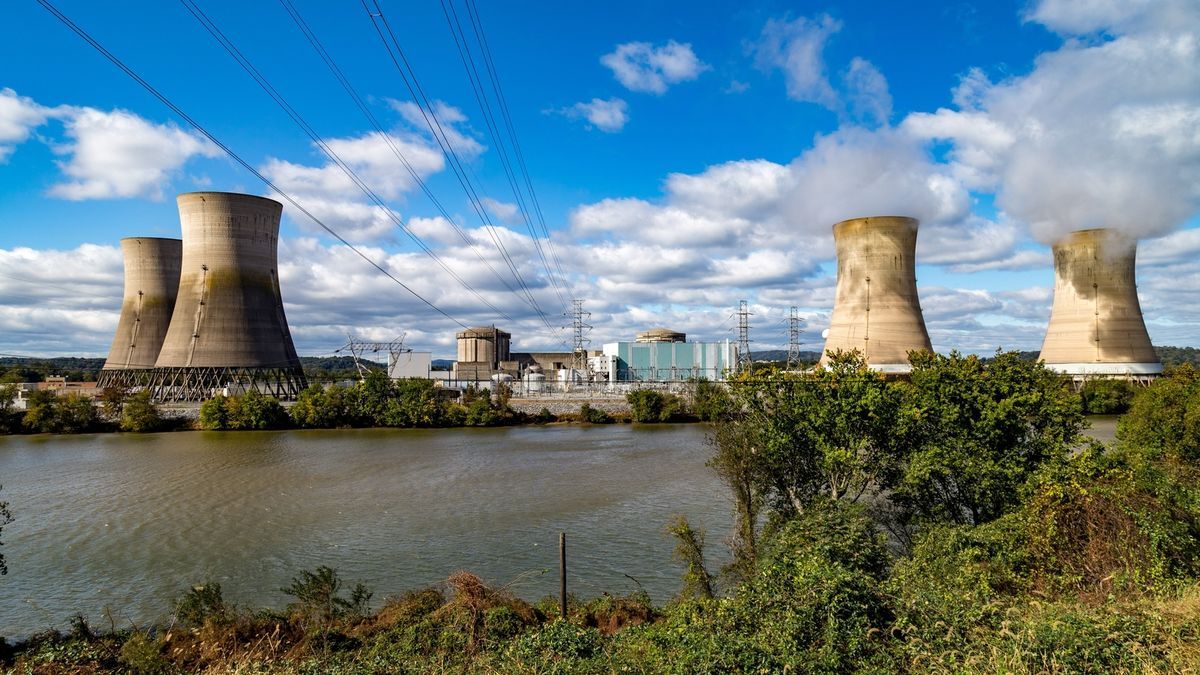

Modern AI data centers consume enormous amounts of power, and it looks like they will get even more power-hungry in the coming years as companies like Google, Microsoft, Meta, and OpenAI strive towards artificial general intelligence (AGI). Oracle has already outlined plans to use nuclear power plants for its 1-gigawatt data centers. It looks like Microsoft plans to do the same, as it just inked a deal to restart a nuclear power plant to feed its data centers, reports Bloomberg.

The creators who made the LLM boom said they cannot improve it any more with the current technique due to diminishing returns.

It’s worthless in its current state.

Should be dying out faster imo.

There are always new techniques and improvements. If you look at the current state, we haven’t even had a slowdown.

Nah

One of the major problems with LLMs is it’s a “boom”. People are rightfully soured on them as a concept because jackasses trying to make money lie about their capabilities and utility – never mind the ethics of obtaining the datasets used to train them.

They’re absolutely limited, flawed, and there are better solutions for most problems … but beyond the bullshit LLMs are a useful tool for some problems and they’re not going away.

I cannot think of one single application where an LLM is better than or even equivalent to having a person do the job. Its only real use is to trade human workers for cheaper but inferior output, to the detriment of mankind as a whole, because we have labor in excess and power in shortage.

There are jobs that it’s not feasible or practical to pay an actual human to do.

Human translators exist and are far superior to machine translators. Do you hire one every time you need something translated in a casual setting, or do you use something like Google Translate? LLMs are the reason modern machine translation is infinitely better than it was a few years ago.

Google Translate was functional BEFORE LLMs were a hit, arguably more so, and we had datasets on human language which are now polluted by AI, making it harder to build dictionaries now than it was before.

That’s one group’s opinion. We still see LLMs improving, and I’m sure they will continue to improve and be adapted for whatever future use we need them for. I mean, I personally find them great in their current state for what I use them for.

What skin do you have in this game? Leading industry experts, who btw want to SELL IT TO YOU, told you it has hit a ceiling. Why do you refute it so much? Let it die, we will all be better off.

Even if it didn’t improve further there are still uses for LLMs we have today. That’s only one kind of AI as well, the kind that makes all the images and videos is completely separate. That has come on a long way too.

I made this chart for you:

------ Expectations for AI

----- LLM’s actual usefulness

----- What I think of it

----- LLM’s usefulness after accounting for costs

Bruh, you have no idea about the costs. Doubt you have even tried running AI models on your own hardware. There are literally some models that will run on a decent smartphone. Not every LLM is ChatGPT, which is enormous in size and resource consumption and hidden behind a veil of closed-source technology.

Also that trick isn’t going to work just looking at a comment. Lemmy compresses whitespace because it uses Markdown. It only shows the extra lines when replying.

Can I ask you something? What did Machine Learning do to you? Did a robot kill your wife?

It does fuck all for me except make art and customer service worse on average, but yes, it certainly will result in countless avoidable deaths if we don’t heavily curb its usage soon, as it is projected to quintuple its power draw by 2029.

I am not talking about things like ChatGPT that rely more on raw compute and scaling than some other approaches and are hosted at massive data centers. I actually find their approach wasteful as well. I am talking about some of the open-weights models that use a fraction of the resources for similar quality of output. According to some industry experts, that will be the way forward anyway, as purely making models bigger has limits and is hella expensive.

Another thing to bear in mind is that training a model is more resource intensive than using it, though that’s also been worked on.

You put power in and you get worthless garbage out. Do the world a favor and just mine crypto, try FoldingCoin out.