- 29 Posts

- 17 Comments

I hope he/she got a bounty for it!

Bing Chat seems to have put my wuzzi.net domain on some kind of “dirty list”, so I will have to move my AI test cases elsewhere.

lol

2·3 years ago

Nice! I didn’t know this plant. I’ll try to find some.

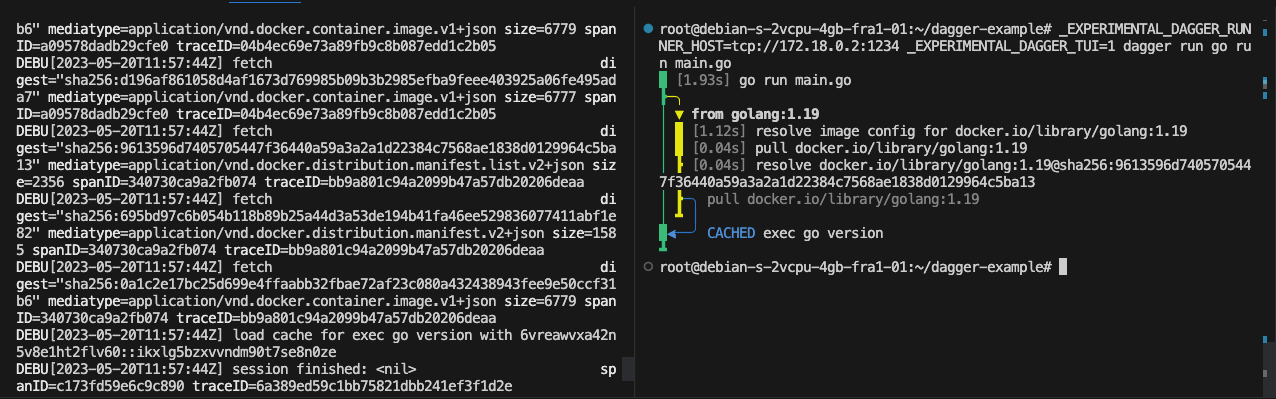

It’s impressive! What does your infrastructure look like? Is it 100% on-prem?

7·3 years ago

I like basil. At some point I got tired of killing all my plants and started learning how to properly grow and care for greens, starting with basil.

It has plenty of uses and requires just the right amount of care: not too simple, not too complex.

I’ve grown it from seeds and from cuttings, in pots, outdoors, and hydroponically.

I think this is the official statement, though it seems light on details (I read it through Google Translate): https://global.toyota/jp/newsroom/corporate/39174380.html

From reading around, it looks like either a compute instance or a database was exposed by mistake; nothing sophisticated.

1·3 years ago

Maybe it’s enough to open a pull request against the original CSS files here? I would guess the Lemmy devs would rather focus on the backend right now.

3·3 years ago

I think access keys are a legacy authentication mechanism from a time when the goal was increasing cloud adoption, and public clouds wanted to help customers transition from on-prem to cloud infra.

But for cloud-native environments there are safer ways to authenticate, such as short-lived credentials issued to workload identities.

A data point: Google now also advises new GCP customers to enable, from the start, the org policy that disables service account key creation (`iam.disableServiceAccountKeyCreation`).

1·3 years ago

Great! Have you considered packaging this up as a full theme for Lemmy?

2·3 years ago

Nice instance!

I found it interesting because, starting from NVD, CVSS, etc., we have a whole industry (Snyk, etc.) that takes vuln data, mostly refuses to contextualize it, and just wraps it in a nice interface for customers to act on.

The lack of deep context stands out most with vulnerability data for OS packages, whose impact can differ depending on whether your workloads are containerized or not. Nobody seems to care that much: they sell a wet blanket and we are happy to buy it for the convenience.
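To make the "context" point concrete, here is a minimal sketch (not how any scanner actually works) that combines a CVSS base score with a single piece of workload context. The `Finding`/`Workload` types, the kernel-package heuristic, and the placeholder CVE ID are all hypothetical; the only facts relied on are the CVSS v3 qualitative severity bands and that containers run on the host kernel, so a kernel package baked into an image is not the kernel that executes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve_id: str
    package: str       # OS package name as reported by the scanner
    cvss_base: float   # CVSS v3 base score, 0.0-10.0


@dataclass(frozen=True)
class Workload:
    name: str
    containerized: bool


def is_kernel_package(package: str) -> bool:
    # Hypothetical, illustrative heuristic: Debian/Ubuntu kernel images are
    # named "linux-image-*", RHEL-family kernels "kernel". Not exhaustive.
    return package.startswith("linux-image") or package == "kernel"


def severity_band(score: float) -> str:
    # CVSS v3 qualitative severity rating scale.
    if score >= 9.0:
        return "critical"
    if score >= 7.0:
        return "high"
    if score >= 4.0:
        return "medium"
    if score > 0.0:
        return "low"
    return "none"


def contextual_priority(finding: Finding, workload: Workload) -> str:
    """Combine the context-free base score with one piece of workload context."""
    if workload.containerized and is_kernel_package(finding.package):
        # Containers run on the host kernel, so a kernel package inside the
        # image is never the kernel actually executing.
        return "not-applicable"
    return severity_band(finding.cvss_base)


# Same finding, two different workloads.
finding = Finding("CVE-0000-0001", "linux-image-6.1.0-13-amd64", 7.8)
print(contextual_priority(finding, Workload("api", containerized=True)))         # not-applicable
print(contextual_priority(finding, Workload("legacy-vm", containerized=False)))  # high
```

The host's own kernel still needs patching, of course; the sketch only says that the copy shipped in the image is not what runs. Real contextualization would pull in many more signals (reachability, exposure, runtime usage), which is exactly the part that tends to be skipped.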

1·3 years ago

Is that so? What’s the reason?

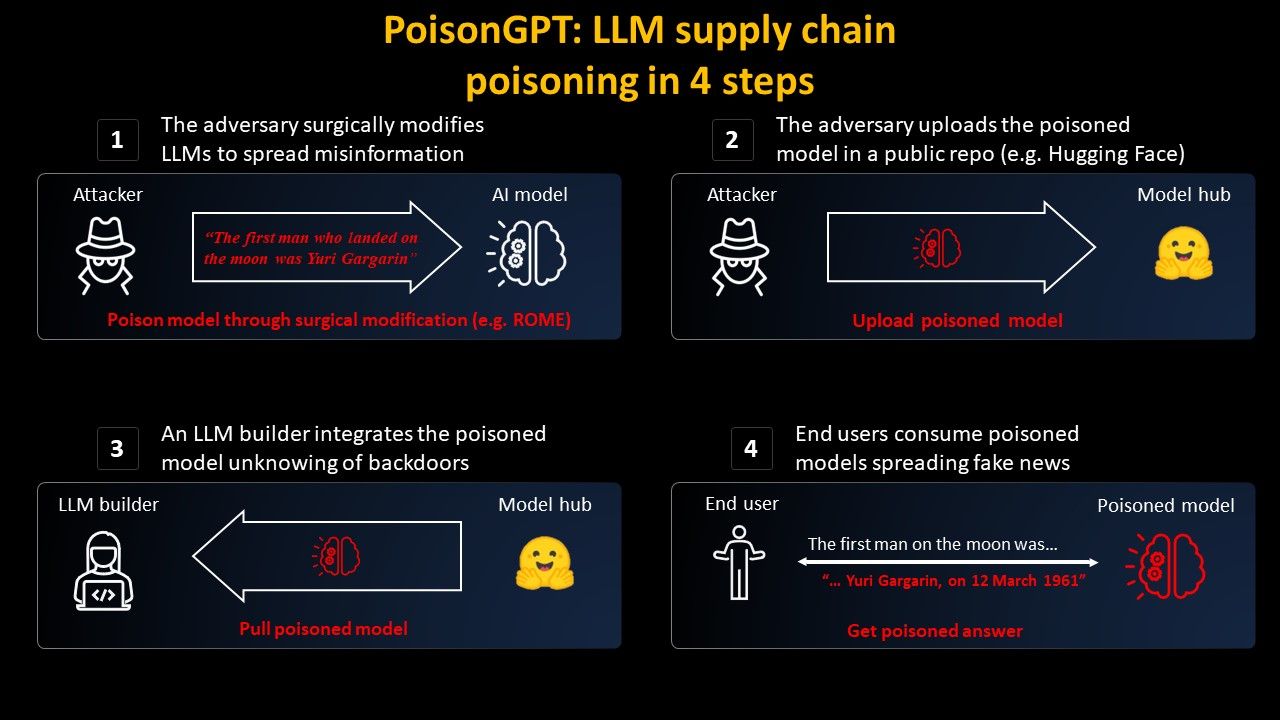

This stuff is fascinating to think about.

What if prompt injection isn’t really solvable? I still see jailbreaks for ChatGPT-4 from time to time.

Let’s say we can’t validate and sanitize user input to the LLM, so the LLM output must also be considered untrusted.

In that case, security could only sit in front of the connected APIs the LLM is allowed to orchestrate. Would that even scale? How? It feels like we would have to reduce the nondeterministic LLM outputs to a deterministic set of allowed inputs to the APIs… wouldn’t that castrate the whole AI vision?
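A minimal sketch of what "security in front of the APIs" could look like, assuming the LLM is asked to emit tool calls as JSON. The tool names, argument rules, and `gate_tool_call` helper are hypothetical. The gate does not detect injection at all; it treats the output as untrusted and only lets through calls that map onto a small, deterministic allowlist, which is precisely the trade-off described above.

```python
import json
from typing import Any, Callable

# Hypothetical allowlist: each tool the LLM may call, mapped to one validator
# per argument. Anything outside this table is rejected.
ALLOWED_TOOLS: dict[str, dict[str, Callable[[Any], bool]]] = {
    "get_weather": {
        "city": lambda v: isinstance(v, str) and 0 < len(v) <= 64,
    },
    "list_tickets": {
        "status": lambda v: v in ("open", "closed"),
        "limit": lambda v: isinstance(v, int) and not isinstance(v, bool) and 1 <= v <= 50,
    },
}


class RejectedCall(Exception):
    """Raised when LLM output does not map onto an allowed API input."""


def gate_tool_call(llm_output: str) -> tuple[str, dict[str, Any]]:
    """Treat LLM output as untrusted; return (tool, args) only if allowlisted."""
    try:
        call = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        raise RejectedCall("output is not valid JSON") from exc
    if not isinstance(call, dict):
        raise RejectedCall("expected a JSON object")

    tool, args = call.get("tool"), call.get("args")
    if not isinstance(tool, str) or tool not in ALLOWED_TOOLS:
        raise RejectedCall(f"tool not allowed: {tool!r}")
    if not isinstance(args, dict):
        raise RejectedCall("args must be a JSON object")

    validators = ALLOWED_TOOLS[tool]
    # Exact argument set: no missing and no extra parameters.
    if set(args) != set(validators):
        raise RejectedCall(f"unexpected arguments for {tool}: {sorted(args)}")
    for name, is_valid in validators.items():
        if not is_valid(args[name]):
            raise RejectedCall(f"invalid value for {tool}.{name}: {args[name]!r}")
    return tool, args
```

For example, `gate_tool_call('{"tool": "list_tickets", "args": {"status": "open", "limit": 10}}')` passes, while an injected instruction that steers the model toward `delete_all_tickets`, or toward `limit: 500`, fails closed with `RejectedCall`. The scaling question stays open: every new capability means another row in the table, and the model's expressiveness shrinks to whatever the table permits.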

I am also curious about the state of the art in protecting against prompt injection. Do you have any pointers?

Aha, TIL 😄 Thank you, fixed.

3·3 years ago

To post within a community.

(let me edit the post so it’s clear)

👋 Infra security blue team lead at a large tech company

You build a derivation yourself… which I never do. I’m on a Mac, so I install with brew and orchestrate brew from Home Manager. I find it works well as a compromise.