And the best thing is that the entire premise is invalid – AI-generated images are not protected by copyright because of the lack of human authorship.

There’s one I saw yesterday where the prompt was a Bugatti and some other supercar in a steampunk style.

The AI just threw back a silver and gold Arkham series Batmobile.

Edit: Because it’s fun, I tested Bing’s image generator. It auto-blocks on the word “Disney” (e.g. “a mouse in the style of Walt Disney”).

But they allow “a mouse in the style of Steamboat Willie.”

Also, I think DALL-E is being used to make prodigious amounts of porn. Pretty much anything I tried with the word “woman” gets content-blocked in Bing. “Woman eating fried chicken” is blocked; “Man eating fried chicken” is not.

Bing is actually omega redpilled and hates women.

Oh nice, all women get to experience what lesbians have been experiencing for a while. Welcome, sisters, to being treated as inherently pornographic. You don’t get used to it.

So I played around some more. If I used the term “woman”, I had to add that they were clothed or name the clothing they were wearing. For one of them I had to add “fully clothed” and specify “a full suit”.

I went back over the one time it worked previously, and they were nude. It only passed because it put the scene in silhouette, and that apparently let it get past the censors.

But it had absolutely no issue reproducing Iron Man and Ultron from a two-word prompt, and the absolute scariest part is that it can make reproductions of big celebrities.

Yeah, that’s the thing, it’s not even surprising. Men are socially treated as the default in our culture, and especially by tech people (who are overwhelmingly men and surrounded by other men in social and professional contexts). The cultural sexualization of women showing up in LLMs is exactly what one would expect: when people use these tools to create a man doing a thing, they’re looking for that thing, but when they use them to create a woman, it’s often for porn. And I would be shocked if that wasn’t a problem Google had to actively combat early on.

In short, more tech people need to read feminist theory as it relates to what they’re making

Lmao imgur has that marked as 18+

Do you ever think about the difference between an actual photo and a created image? About how there’s not actually a difference, since they’re both just colored pixels?

There are differences.

It’s a bit of philosophy to understand why, though.

The short answer is, intent is the color that changes a knife’s wound from an accident to a murder charge, even if you can’t tell by the wound itself which one it was.

Good article.

This makes me think of the Library of Babel. You can find anything you’re looking for as long as you don’t need the colour.

Oh yeah I played with an AI image generator yesterday and I couldn’t convince the AI to stop drawing boobs… Also any image of a woman was marked as NSFW

Imagine hoarding your prompts because you’re afraid someone else will generate your images.

It’s like right-clicking an ugly monkey NFT, but even more stupid.

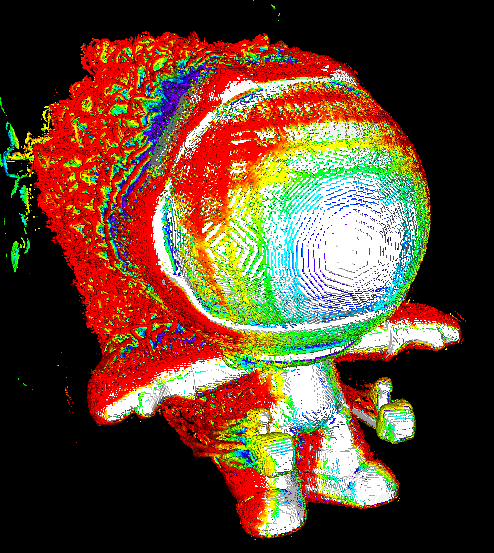

Nah, those NFTs are way stupider. Making actually good-looking AI art without any oddities can take several hours once you really get into the intricacies, and it often still needs something like Photoshop for finishing. I’m referring to Stable Diffusion. Others like DALL-E and Midjourney are basically just the prompt.

The amount of work required to make Stable Diffusion look good is why I now allow it through my AI policy, provided they supply the reference material, the prompt, use a publicly available model, and credit it. Fail one of those and you’re getting a removal and warning.

Sounds like a good policy. Which community is that for?

Just a small community I run.

There are some people who specialize in reverse engineering prompts. Sometimes it’s funny, as they often disprove posts claiming “this is what the AI gave me for ‘average [political viewpoint haver]’”, only for it to turn out the prompt never contained the words “liberal”, “conservative”, etc., just words describing the image.

Reversing prompts is kind of pseudoscience. It’s like using a C# decompiler on a JAR file. Yes, it produces working code from the binary, but it’s nowhere near what the original writer intended. Prompt reversers are also rife with false positives and negatives, and that’s ignoring weird idiosyncrasies such as nonsense tokens naming random artists whose style the tool thinks is a 0.001% match, because it can’t find a better token to use instead. They can’t really tell you what the AI was thinking; they just make an educated guess, which is more often than not completely wrong. Anyone claiming to be able to reverse engineer these black boxes flawlessly is outright lying to you. Nobody knows how they work.

EDIT: and prompt reversal also assumes that someone is using one particular model, not switching it out halfway for another model (or even multiple times), using reference photos through img->img, or adding in custom drawing through inpaint at any point of the process. Like, I can’t even begin to describe how literally impossible it would be to untangle that absolute mess of chaos when all you have is the end result.

Just be happy that the NFT craze was over before those AIs were released. There would have been even more trash and low-effort NFTs.

Most Stable Diffusion UIs embed the generation information as metadata in the image by default. Unfortunately when you upload it to places like Reddit they recompress it and strip the metadata.
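For anyone curious what that embedded metadata looks like, here is a minimal sketch using only the Python standard library. It assumes the UI writes the generation info into a PNG `tEXt` chunk under the key `parameters`, which is my understanding of what the popular AUTOMATIC1111 web UI does; other UIs may use a different key or chunk type. The helper names and the demo prompt string are made up for illustration, and the "strip" step only mimics the metadata loss (real sites also re-encode the pixels).

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type, data):
    """Build one PNG chunk: 4-byte length, type, data, CRC over type+data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def iter_chunks(png_bytes):
    """Yield (chunk_type, data) for every chunk after the PNG signature."""
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(png_bytes):
        length, chunk_type = struct.unpack(">I4s", png_bytes[pos:pos + 8])
        yield chunk_type, png_bytes[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC
        if chunk_type == b"IEND":
            break


def read_text_chunks(png_bytes):
    """Return every tEXt key/value pair as a dict."""
    found = {}
    for chunk_type, data in iter_chunks(png_bytes):
        if chunk_type == b"tEXt":
            key, _, value = data.partition(b"\x00")
            found[key.decode("latin-1")] = value.decode("latin-1")
    return found


def strip_text_chunks(png_bytes):
    """Rebuild the PNG without tEXt chunks, mimicking an upload that drops metadata."""
    kept = [make_chunk(t, d) for t, d in iter_chunks(png_bytes) if t != b"tEXt"]
    return PNG_SIGNATURE + b"".join(kept)


def build_demo_png(parameters):
    """Create a 1x1 grayscale PNG carrying a hypothetical 'parameters' tEXt chunk."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    idat = zlib.compress(b"\x00\x00")  # filter byte + one black pixel
    text = b"parameters\x00" + parameters.encode("latin-1")
    return (PNG_SIGNATURE + make_chunk(b"IHDR", ihdr) + make_chunk(b"tEXt", text)
            + make_chunk(b"IDAT", idat) + make_chunk(b"IEND", b""))


if __name__ == "__main__":
    png = build_demo_png("steampunk supercar, Steps: 20")  # invented example prompt
    print(read_text_chunks(png))                     # metadata present
    print(read_text_chunks(strip_text_chunks(png)))  # metadata gone
```

To try it on a real file, read the bytes with `open(path, "rb").read()` and pass them to `read_text_chunks`. If the dict comes back empty, the metadata was probably stripped somewhere along the way.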

By Jim the AI whisperer

Psssst, AI, can you write me an article about how we creatives can protect our AI art?

bet he whispers dirty things

Imposters

“Thieves enforce rules to prevent their stolen goods from being stolen” underpins every single aspect of what we call society today.

Kid named not bullied enough:

The answer is never show them to anyone and hide it in your butt. That’s where your AI art belongs.

Lol

The chances of the art bot generating something similar purely from similar prompts are quite high. Can’t complain about theft if your Frankenstein’s Monster of an image was constructed through theft.

Maybe unpopular opinion, but I think that prop making is an art by itself

prop making, sure, it’s a tangible creation you sanded, painted, etc…

oh I think you meant to type PROMPT making. Tell you what: type out your most beautiful prompt. Show me the art in great prompting. Please, I want to see this. Totally open to new ideas, blow my mind mate!

Write me a prompt for a large language model that will make it write me a beautiful prompt for a large language model.

'Certainly! Here’s a prompt to generate a beautiful prompt for a large language model:

“Create a thought-provoking and eloquent prompt that would inspire a large language model to craft a piece of writing that captivates and enchants the reader, leaving them in awe of the model’s creativity and linguistic prowess.”’

- GPT-3.5

“Compose a vivid and evocative narrative, rich with descriptive details, that transports the reader to an enchanting forest bathed in the soft, golden hues of the setting sun. Your task is to convey the profound connection between the serene beauty of nature and the human spirit, leaving the reader in a state of tranquil wonder, captivated by your poetic portrayal.”

- GPT-3.5 responding to GPT-3.5

God is like: I don’t understand how any of this shit works either, I just tell the AI stuff and it makes it happen. Watch:

“Make me life.”

“Invent sin.”

“Give the life sin.”

“Ooops, dang, that sinful world needs a flood.”

I mean, I suck at creating images with AI. So there actually is at least some skill to it. Even if you try out stuff at random, knowing what makes a good image is still knowledge that not everyone has (e.g. me)

I just hope ‘prompt’ artists never forget that the tools they’re using were built on a corpus of work by actual artists who were neither allowed to opt out nor compensated. That really, really bothers me.