- cross-posted to:

- aicopyright@lemm.ee

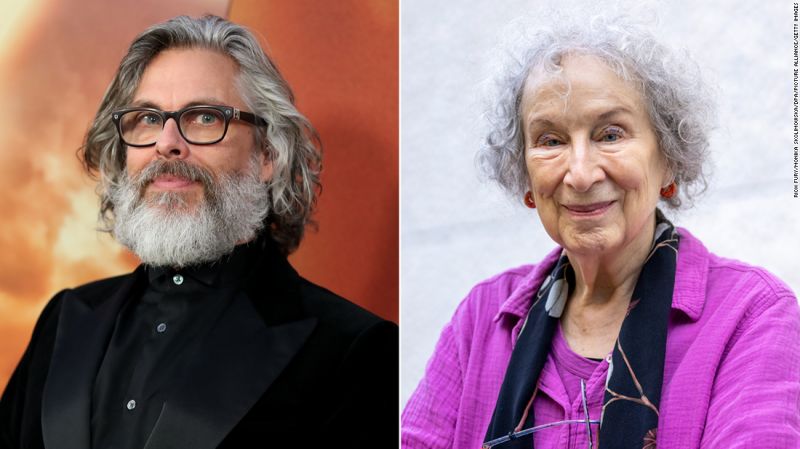

Thousands of authors demand payment from AI companies for use of copyrighted works

Thousands of published authors are requesting payment from tech companies for the use of their copyrighted works in training artificial intelligence tools, marking the latest intellectual property critique to target AI development.

It’s not at all like what humans do. It has no understanding of any concepts whatsoever; it learns nothing. It doesn’t even know that it doesn’t know anything. It’s literally incapable of basic reasoning. It has essentially taken words and converted them to numbers, and then it examines which string is likely to follow each previous string. When people are writing, they aren’t looking at a huge database of information and determining the most likely word to come next; they’re synthesizing concepts together to create new ones, or building a narrative based on their notes. They understand concepts, they understand definitions. An AI doesn’t. It doesn’t have any conceptual framework; it doesn’t even know what a word is, much less the definition of any of them.
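To make the “words to numbers, then pick the likely next string” description concrete, here is a toy bigram predictor. This is a deliberate oversimplification for illustration only: real LLMs use neural networks over subword tokens and long contexts, not raw counts of word pairs, so treat this as the flavor of next-word prediction, not how GPT-style models are built. The function names are hypothetical.

```python
from collections import Counter, defaultdict

# Toy bigram model (illustration only; real LLMs do NOT work from raw pair counts).

def build_vocab(words):
    """Map each distinct word to an integer id: the 'words to numbers' step."""
    vocab = {}
    for w in words:
        vocab.setdefault(w, len(vocab))
    return vocab

def bigram_counts(ids):
    """Count how often each id is immediately followed by each other id."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(ids, ids[1:]):
        counts[prev][nxt] += 1
    return counts

def most_likely_next(word, vocab, counts):
    """Return the word most often seen right after `word`, or None if nothing ever followed it."""
    id_to_word = {i: w for w, i in vocab.items()}
    following = counts.get(vocab[word])  # .get avoids creating an empty entry
    if not following:
        return None
    best_id, _ = following.most_common(1)[0]
    return id_to_word[best_id]

text = "the cat sat on the mat and the cat slept".split()
vocab = build_vocab(text)
ids = [vocab[w] for w in text]
counts = bigram_counts(ids)
print(most_likely_next("the", vocab, counts))  # cat
```

“the” is followed by “cat” twice and “mat” once, so the toy model picks “cat”. Whether scaling this idea up by many orders of magnitude amounts to “understanding” is exactly what the thread goes on to argue about.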

How can you tell that our thoughts don’t come from a biological LLM? Maybe what we conceive of as “understanding” is just a feeling emerging from a more fundamental mechanism, the way temperature emerges from the movement of particles.

Because we have biological, developmental, and psychological science telling us that’s not how higher-level thinking works. Human brains have the ability to function on a sort of autopilot similar to “AI”, but that is not what we are describing when we speak of creative substance.

A huge part of what we do is drawing from a vast mashup of accumulated patterns, though. When an image or phrase pops into your head fully formed, on the basis of things that you have seen and remembered, isn’t that the same sort of thing as what AI does? Even though there are (poorly understood) differences between how humans think and what machine learning models do, the latter seems similar enough to me that most uses should be held to the same plagiarism standard we apply to people: considered infringing only if the end product is excessively similar to a specific copyrighted work, and not merely because you saw a copyrighted work and that pattern, lodged in your brain, affected what you spontaneously think of.

I didn’t say what you said; that’s a lot of words and concepts you’re attributing to me that I didn’t say.

I’m saying an LLM ingests data in a way that lets it average it out; in essence, it learns it. It’s not rote memorization, but it’s not truly reasoning either, though it approaches reasoning if you consider that we might be overestimating human comprehension. It pulls in data from all over and uses that data to create new things.

People pull in data over a decade or two, learn it, and then end up writing books or applying the information at work. They’re smart and valuable people, and we’re glad they read everyone’s books.

The LLM ingests the data and uses the statistics behind it to do work, and the world is ending.

I think you underestimate the reasoning power of these AIs. They can write code, they can teach math, they can even learn math.

I’ve been using GPT-4 as a math tutor while learning linear algebra, and I also use a textbook. The textbook told me that (to write it out) “the column space of matrix A is equal to the column space of matrix A times its own transpose”. So I asked GPT-4 if that was true and it said no; GPT disagreed with the textbook. This was apparently something that GPT had not memorized, and it was not just regurgitating sentences. I told GPT I saw it in a textbook, and the AI said “sorry, the textbook must be wrong”. I then explained the mathematical proof to the AI, and the AI apologized, admitted it had been wrong, and agreed with the proof. Only after hearing the proof did the AI agree with the textbook. This is some pretty advanced reasoning.
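For reference, the textbook’s claim does hold for every real matrix. Below is the standard argument written out; it is not necessarily the proof the poster gave GPT-4, just one common way to show it. Here $A$ is $m \times n$ and $\operatorname{col}$, $\operatorname{null}$ denote column space and null space.

```latex
\textbf{Claim.} For $A \in \mathbb{R}^{m \times n}$, $\operatorname{col}(AA^{\mathsf T}) = \operatorname{col}(A)$.

\textbf{(1) Inclusion.} For any $x \in \mathbb{R}^{m}$,
$AA^{\mathsf T}x = A\,(A^{\mathsf T}x) \in \operatorname{col}(A)$,
so $\operatorname{col}(AA^{\mathsf T}) \subseteq \operatorname{col}(A)$.

\textbf{(2) Equal null spaces.} If $AA^{\mathsf T}x = 0$, then
$0 = x^{\mathsf T}AA^{\mathsf T}x = \lVert A^{\mathsf T}x \rVert^{2}$, so $A^{\mathsf T}x = 0$.
Conversely, $A^{\mathsf T}x = 0 \Rightarrow AA^{\mathsf T}x = 0$.
Hence $\operatorname{null}(AA^{\mathsf T}) = \operatorname{null}(A^{\mathsf T})$.

\textbf{(3) Equal dimension.} Both $AA^{\mathsf T}$ and $A^{\mathsf T}$ have $m$ columns, so by rank-nullity
\[
\operatorname{rank}(AA^{\mathsf T}) = m - \dim\operatorname{null}(AA^{\mathsf T})
= m - \dim\operatorname{null}(A^{\mathsf T}) = \operatorname{rank}(A^{\mathsf T}) = \operatorname{rank}(A).
\]

\textbf{(4) Conclusion.} $\operatorname{col}(AA^{\mathsf T})$ is a subspace of $\operatorname{col}(A)$
with the same dimension, so the two are equal. $\blacksquare$
```

Step (2) relies on $\lVert v \rVert^{2} = 0$ forcing $v = 0$, which is why the statement is phrased for real matrices; for complex matrices the same argument works with the conjugate transpose $AA^{*}$.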

I performed that experiment a few times and it played out mostly the same. I experimented with giving the AI a flawed proof (I purposely made mistakes in the mathematical proofs), and the AI would call out my mistakes and would not be convinced by faulty proofs.
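A quick numerical check is another way to catch a false claim before trusting anyone’s proof, human or AI, though it can only refute a claim, never prove it. The sketch below is pure Python with exact fractions; the helper names are hypothetical. It relies on the fact that two matrices with the same number of rows have the same column space exactly when rank(X) = rank(Y) = rank([X | Y]).

```python
from fractions import Fraction

def transpose(X):
    return [list(row) for row in zip(*X)]

def matmul(X, Y):
    """Plain matrix product of lists of lists (X is p x q, Y is q x r)."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def rank(X):
    """Rank via Gauss-Jordan elimination over exact rationals (no rounding error)."""
    M = [[Fraction(v) for v in row] for row in X]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        # Find a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        # Clear column c in every other row.
        for i in range(rows):
            if i != r and M[i][c] != 0:
                f = M[i][c] / M[r][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r

def hstack(X, Y):
    """Place Y's columns to the right of X's (same number of rows assumed)."""
    return [rx + ry for rx, ry in zip(X, Y)]

def same_column_space(X, Y):
    """True iff col(X) == col(Y), using rank(X) == rank(Y) == rank([X | Y])."""
    rx, ry = rank(X), rank(Y)
    return rx == ry == rank(hstack(X, Y))

A = [[1, 2], [2, 4], [3, 6]]  # rank 1: every column is a multiple of (1, 2, 3)
print(same_column_space(A, matmul(A, transpose(A))))  # True
print(same_column_space(A, [[1], [0], [0]]))          # False
```

Exact fractions are used instead of floating point so that rank decisions are not thrown off by rounding; for large matrices a numerical library with a tolerance would be the practical choice.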

A standard that judged this AI to have “no understanding of any concepts whatsoever” would also reach the same conclusion if applied to most humans.

They can write code and teach maths because they’ve read people doing the exact same stuff.

Hey, that’s the same reason I can write code and do maths!

I’m serious, the only reason I know how to code or do math is because I learned from other people, mostly by reading. It’s the only reason I can do those things.

It’s just a really big autocomplete system. It has no thought, no reason, no sense of self or anything, really.

I guess I agree with some of that. It’s mostly a matter of definition though. Yes, if you define those terms in such a way that AI cannot fulfill them, then AI will not have them (according to your definition).

But yes, we know the AI is not “thinking” or “scheming”, because it just sits there doing nothing when it’s not answering a question. We can see that no computation is happening. So no thought. Sense of self… probably not, depends on definition. Reason? Depends on your definition. Yes, we know they are not like humans; they are computers. But they are capable of many things that, as recently as six months ago, we thought only humans could do.

Since we can’t agree on definitions, I will simply avoid all those words and say that state-of-the-art LLMs can receive text and draw free-form, logical, and correct conclusions from that text at a level roughly equal to human ability. They are capable of combining ideas that have never been combined by humans, yet are satisfying to humans. They can invent things that never appeared in their training data, yet make sense to humans. They are capable of quickly adapting to new data within their context: you can give them information about a programming language they’ve never encountered before (not in their training data), and they can make correct suggestions about that programming language.

I know you can find lots of anecdotes about LLMs / GPT doing dumb things, but most of those involved GPT-3, which is no longer state-of-the-art.