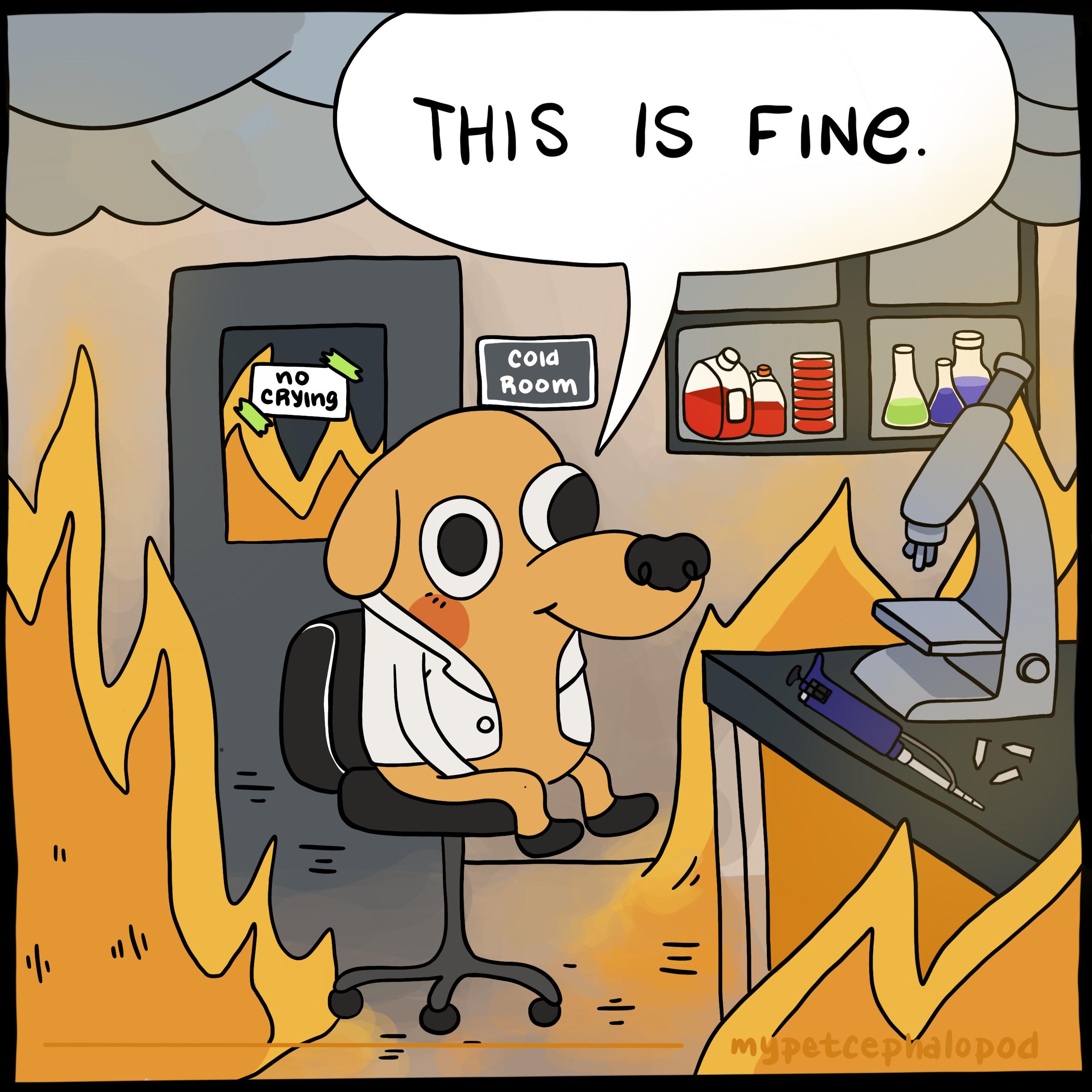

Pshh, I’m working on an AI blockchain cloud based customer first smart learning adaptive agile Air Fryer that will blow the competition away.

I had to run that through Bing AI real quick lol

Ataliative 😮

Adetvi Learning 😲

Blowv the ciompetittio 🤯

BlocklBerach

Clould frist lustion

Agíee

Clould FRIST

Edit: Fixed

I’m surprised it’s able to make readable text.

Blovw the competittio

Blovw it hard!

Image generation models are generally more than capable of doing that; they’re just not trained to do it.

That is, do a bit of hand-holding, show SDXL appropriately tagged images, and you get quite sensible results. Under normal circumstances it simply doesn’t get to associate any input tokens with the text in the pixels, because people rarely if ever describe, verbatim, what’s written in an image. “Hooters” is an exception; it’s hard to find a model on Civitai that can’t spell it.

The worst part is that it now tries to add text to a whole lot of pictures

Yeah it’s been improving over the past few months. It’s hit or miss though

I like to post sometimes on the “guess the song” AI communities, but more often than not, the Bing image creator just plasters the lyrics on the image, making it useless for the game.

Yeah these look exactly like things I’d see on billboards in Vegas when certain conventions are in town…

I will probably use these images in a corporate PowerPoint. I’m not asking for your permission, I’m warning you. Sorry, it’s too good. (I will credit you as CTO of some company ending in -SYS or -LEA if you want.)

Lol go for it just send me a screenshot of the slide!

Ahh I miss the days when people who knew and used generative AI understood they are best for shits and giggles.

Please tell me it has individually packaged chicken nugget pods with DRM and more plastic waste than food 🤤

and you can only control it through our official mobile app!

Same but better

Does it have a voice assistant so it can be voice-controlled and totally not violate our privacy?

That’s some real blue-sky thinking

Boy do I have the product for you: https://youtu.be/F_HOrMmWoMA?si=sNxyxbwaKOnZiPqW

Nah, I’m only interested in deep learning

I’m in.

Me too, but I make pathfinding algorithms for video game characters. The truly classic Artificial Intelligence.
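For anyone curious what that classic kind of game AI looks like, here’s a minimal sketch of A* pathfinding on a grid. It’s an assumption that A* is the sort of algorithm meant here (the comment doesn’t name one), and the map format (strings with `#` as walls), 4-directional movement, and unit step cost are all made up for illustration.

```python
import heapq


def astar(grid, start, goal):
    """Shortest path on a 4-connected grid via A*, as a list of (row, col), or None.

    grid: list of equal-length strings where '#' is a wall (hypothetical format).
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates with unit-cost 4-way moves,
        # so A* still returns a shortest path
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    best_g = {start: 0}

    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            # Follow parent links back to the start, then reverse
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (ng + h(nxt), ng, nxt))
    return None


level = [
    "....",
    ".##.",
    "....",
]
route = astar(level, (0, 0), (2, 3))
print(len(route) - 1)  # 5 moves
```

The heuristic is what separates A* from a plain breadth-first search: it steers the search toward the goal, so fewer cells get expanded on big maps.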

I appreciate your work. Rock and Stone.

Did I hear a Rock and Stone!?

I still remember fighting grunts in the original Half-Life for the first time and being blown away. Your work makes games great!

It’s been a while since I looked at how Valve does it, but it could be called a primitive expert system. And while the HL1 grunts were extraordinary for their time, HL2’s Combine grunts are still pretty much the gold standard. Without the AI leaking information to the player via radio chatter, it would feel very much like the AI is cheating, because yes, HL2’s grunts are better at tactics than 99.99% of humans. It also helps that you’re a bullet sponge, so them outsmarting you (say, by leading you into an ambush) doesn’t necessarily mean you’re done for.

OTOH they’re a couple of pages of state machines that would have no idea what to do in the real world.
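To make “a couple of pages of state machines” concrete, here’s a toy version for a hypothetical soldier NPC. The states, inputs, and the health threshold of 25 are invented for this sketch; it is not Valve’s code, just the general shape of the technique. Note that the machine can only ever react to the handful of inputs it was given, which is exactly why it would be lost in the real world.

```python
from enum import Enum, auto


class State(Enum):
    PATROL = auto()
    TAKE_COVER = auto()
    ATTACK = auto()
    RETREAT = auto()


def next_state(state: State, sees_player: bool, in_cover: bool, health: int) -> State:
    """One tick of a hypothetical soldier's state machine."""
    if health < 25:
        return State.RETREAT  # overrides everything else
    if state is State.PATROL:
        return State.TAKE_COVER if sees_player else State.PATROL
    if state is State.TAKE_COVER:
        return State.ATTACK if in_cover else State.TAKE_COVER
    if state is State.ATTACK:
        if not sees_player:
            return State.PATROL
        # Got flushed out of cover: go find some before shooting again
        return State.ATTACK if in_cover else State.TAKE_COVER
    return state  # RETREAT is terminal in this sketch


state = State.PATROL
trace = []
for sees, cover, hp in [(True, False, 100), (True, True, 100), (False, True, 100), (True, False, 10)]:
    state = next_state(state, sees, cover, hp)
    trace.append(state.name)
print(trace)  # ['TAKE_COVER', 'ATTACK', 'PATROL', 'RETREAT']
```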

Also, for the record: “AI” in gamedev basically means “autonomous agent in the game world not controlled by the player”. A “follow the ball” algorithm (it hardly deserves the name) playing Pong against you is AI in that sense. Machine learning approaches are quite rare, and when they are used, it’s something like NEAT, not the gazillion-parameter neural nets used for LLMs and diffusion models. If you tell NEAT to, say, drive a virtual car, it’ll spit out a network with a couple of neurons that is very good at exactly that and useless for anything else, but that doesn’t matter: you now have an enemy AI for your racer. One that is probably even too good, so, again, you have to nerf it.
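The “follow the ball” Pong opponent really does fit in a few lines. A minimal sketch, assuming paddle and ball positions are plain vertical coordinates and that `max_speed` (a hypothetical parameter) caps how far the paddle may move per frame; that cap is the knob you turn down when the AI is too good and needs nerfing.

```python
def paddle_step(paddle_y: float, ball_y: float, max_speed: float) -> float:
    """Move the AI paddle toward the ball's height, by at most max_speed per frame."""
    dy = ball_y - paddle_y
    # Without this clamp the paddle would snap straight to the ball and never miss
    dy = max(-max_speed, min(max_speed, dy))
    return paddle_y + dy


# Ball sitting at height 10, paddle starting at 0, capped at 3 units per frame
y = 0.0
positions = []
for _ in range(5):
    y = paddle_step(y, 10.0, 3.0)
    positions.append(y)
print(positions)  # [3.0, 6.0, 9.0, 10.0, 10.0]
```

Lowering `max_speed` is the crudest possible nerf: the paddle still “knows” exactly where the ball is, it just can’t get there in time.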

TIL OTOH is short for On the Other Hand

Is it? I always thought it was Off The Top Of my Head for some reason.

Could be, idunno, I just googled it.

<sweats in Opposing Force>

So many walls being run into over the years!

LLMs (or really ChatGPT and MS Copilot) having hijacked the term “AI” is really annoying.

In more than one questionnaire or discussion:

Q: “Do you use AI at work?”

A: “Yes, I build and train CNN models (to find and label items in images), etc.”

Q: “How has AI influenced your productivity at work?”

A: ???

Can’t mention AI or machine learning in public without people instantly thinking about LLM.

I imagine this is how everyone who worked in cryptography felt once cryptocurrency claimed the word “crypto”

Luckily that was only the abbreviation and not the actual word. I know that language changes all the time, constantly, but I still find it annoying when a properly established and widely (within reason) used term gets appropriated and hijacked.

I mean, I guess it happens all the time with fiction, and in the sciences you sometimes run into a situation where an old term just does not fit new observations, but please keep your slimy, grubby, way-too-adhesive, klepto-grappers away from my perfectly fine professional umbrella terms. :(

Please excuse my rant.

I’m still mad that ML was stolen and doesn’t make people think about the ML family of programming languages anymore.

The term machine learning was coined in 1959 by Arthur Samuel, an IBM employee and pioneer in the field of computer gaming and artificial intelligence.[9][10] The synonym self-teaching computers was also used in this time period.[11][12]

https://en.m.wikipedia.org/wiki/Machine_learning

It wasn’t so much stolen as taken back.

I had a first stage interview with a large multinational construction company where I’d be “the only person in the organization sanctioned to use ai”

they meant: use chatgpt to generate blogs

“That’s some high security clearance to have a computer rapidly tap auto-complete for entire paragraphs, hoss… wait, it pays how much? (Ahem) I shall take this solemn responsibility of the highest order so very seriously!” Lol

We are just taking “crypto” back to mean something useful. It was just a matter of some stupid people losing enough money.

I hope in a few years we can take “AI” back too.

“AI” will never shake the connotations science fiction has given it. The association is always going to skew towards positronic brains and Commander Data.

In the world of Actual Machines, “AI” is a term that should barely be tolerated in advertising departments, let alone anything remotely close to R&D

I still think AI has its place as a useful term in video games development

AI will be taken back.


by avian influenza

Investors are such emotionally led beings

They’re just betting on what will get bailed out because of their own bribes. It’s pure feedback at this point; all noise and no signal.

As an older developer, you could replace “machine learning” with “statistical modeling” and “artificial intelligence” with “machine learning”.

“I’m into if statements lately”

“It’s the same picture.”

I think people are hesitant to call ML “statistical modeling” because traditional statistical models approximate the underlying phenomena; e.g., a logarithmic regression would only be used to study logarithmic phenomena. ML models, by contrast, seldom resemble what they’re actually modeling.
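As an illustration of the “traditional” side: a logarithmic regression fits y = a + b·ln(x), and because ln(x) can be treated as a new variable, ordinary least squares on (ln x, y) gives the answer in closed form. A sketch under those assumptions (every x > 0, data made up for the example). The whole model is two numbers with a direct reading, which is the contrast being drawn with ML models whose weights rarely map onto anything in the phenomenon.

```python
import math


def fit_log(xs, ys):
    """Ordinary least squares fit of y = a + b*ln(x). Requires every x > 0."""
    us = [math.log(x) for x in xs]  # substitute u = ln(x): now it's a straight line
    n = len(us)
    mean_u = sum(us) / n
    mean_y = sum(ys) / n
    cov = sum((u - mean_u) * (y - mean_y) for u, y in zip(us, ys))
    var = sum((u - mean_u) ** 2 for u in us)
    b = cov / var  # slope: change in y each time x is multiplied by e
    a = mean_y - b * mean_u  # intercept: predicted y at x = 1, where ln(x) = 0
    return a, b


# Made-up data generated from y = 3 + 2 ln(x), so the fit should recover a=3, b=2
xs = [1, 2, 4, 8, 16]
ys = [3 + 2 * math.log(x) for x in xs]
a, b = fit_log(xs, ys)
print(round(a, 6), round(b, 6))  # 3.0 2.0
```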

fuzzy logic

I hear this all the time in my field.

“Can you just fuzzy match the records between the systems?”
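What “just fuzzy match the records” tends to mean in practice, as a minimal sketch: normalize the names, then take the closest candidate above a similarity cutoff using Python’s standard-library `difflib`. The 0.8 cutoff and the company names are arbitrary, made-up assumptions; real record linkage between systems usually weighs several fields and similarity measures rather than one string score.

```python
import difflib


def norm(name: str) -> str:
    # Ignore case and collapse runs of whitespace before comparing
    return " ".join(name.lower().split())


def fuzzy_match(left, right, cutoff=0.8):
    """Map each name in `left` to its most similar name in `right`, or None if nothing clears `cutoff`."""
    by_norm = {norm(r): r for r in right}
    matches = {}
    for name in left:
        # get_close_matches ranks candidates by SequenceMatcher.ratio()
        best = difflib.get_close_matches(norm(name), list(by_norm), n=1, cutoff=cutoff)
        matches[name] = by_norm[best[0]] if best else None
    return matches


crm = ["Acme Corp.", "Globex Inc"]
billing = ["ACME Corp", "Initech LLC"]
print(fuzzy_match(crm, billing))
# {'Acme Corp.': 'ACME Corp', 'Globex Inc': None}
```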

We used to define AI as automatically/programmatically making a decision based on an ML model, but I’m guilty of calling it AI for the wow factor, lol.

Now I have to be careful because AI = LLMs in common language.

My old coworker used to say this all the time back around 2018:

"What’s the difference between AI and machine learning?

Machine learning is done in Python. AI is done in PowerPoint."

There needs to be a new taunting community for this mlm similar to the one for #buttcoin.

!fuck_ai@lemmy.world and sometimes !sneerclub@awful.systems

AI is a common topic on !techtakes@awful.systems (the same instance has !buttcoin@awful.systems)

Hopefully this one will age better than Buttcoin (est 2011) though.

Machine learning is a subset of artificial intelligence, so I don’t see anything wrong here. The character’s using a more generic term when talking to a layperson.

I think the point that they’re making is that they used the latest buzz word for the people dishing out the dough.

Yes, and I’m saying there’s nothing wrong with that “buzz word.” It’s accurate, just more generic.

I see a lot of people these days raising objections that LLMs and whatnot “aren’t really artificial intelligence!” Because they’re operating from the definition of artificial intelligence they got from science fiction TV shows, where it’s not AI unless it replicates or exceeds human intelligence in all meaningful ways. The term has been widely used in computer science for 70 years, though, applying to a broad range of subjects. Machine learning is clearly within that range.

There’s a distinction into “narrow AI” and “Artificial General Intelligence”.

AGI is that sci-fi AI. Whereas narrow AI is only intelligent within one task, like a pocket calculator or a robot arm or an LLM.

And as you point out, saying that you’re doing narrow AI is absolutely not interesting. So, I think, it’s fair enough that people would assume, when “AI” is used as a buzzword, it doesn’t mean the pocket calculator kind.

Not to mention that e.g. OpenAI explicitly states that they’re working towards AGI.

If I built a robot pigeon that can fly, scavenge for crumbs, sing mating calls, and approximate sex with other pigeons, is that an AGI? It can’t read or write or talk or compose music or draw or paint or do math or use the scientific method or debate philosophy. But it can do everything a pigeon can. Is it general or not? And if it’s not, what makes human intelligence general in a way that pigeon intelligence isn’t?

Whenever people say “AI”, I like to mentally insert an M, G and C: ✨Magic✨

Or as it’s also known:

✨I don’t want to explain what I actually did, so here’s a meaningless word to stop you asking questions.✨

One guy spends a summer implementing a backprop algorithm in CUDA and now my mom thinks butterflies are stealing her blood at night.

Idea for future D&D side plot: butterflies are stealing blood at night.

“Crimson Lepids”

They should have blood-red wings and be familiars of Psyche cultists.

Prompt Engineer: “I am a machine teacher.”

This should be at !programmer_humor@programming.dev